What penetration tests have shown me

I've worked with a few cyber security firms over the years, so let's take a look at some of their findings.

Over the years I've worked with a few penetration testing companies to get internal systems (or applications) tested. Following on from my post about preparing for a penetration test, I'm going to discuss some key things I've learnt, or had reinforced, by these tests.

The red team always wins

The "red team" is your penetration tester(s), and they spend their days testing systems and learning new techniques. Over time they build up vast knowledge of "normal" system admin behaviour, and by "normal" I don't mean the most secure: I'm talking about the shortcuts we all take, common configurations (and their associated errors) and the things sysadmins don't do because it's complicated or takes too much time. When you consider all of that, is it any wonder they always win? It's not a case of if, but of when and how. I've never seen a test where the testers have failed.

Don't believe me? There's a really interesting thread on Twitter where a penetration tester had to try really hard, but still managed to get in (it's a long thread, but the end is worth it):

Singing the Blues: Taking Down an Insider Threat

"I had all of the advantages. I was already inside the network. No one suspected me. But they found my hack, kicked me off the network...

...and physically hunted me down." pic.twitter.com/468Q6C4KR5

Tinker ❎ (@TinkerSec), November 16, 2018

Default credentials will be your downfall

admin/admin, admin/<blank>, cisco/cisco - there are many lists of default credentials on the Internet. A simple search for the equipment or software name, followed by "default credentials", will likely yield the answer you're looking for.

Given how readily available this information is, it stands to reason you'd want to change any default passwords as soon as possible. This is becoming increasingly important as we add more devices to our homes as part of the Internet of Things, yet so often default passwords are left unchanged because they're "easy to remember".

Consider this. I find your device/application during a test and the password is still the default. I can log in, make changes, perhaps create another account so I can maintain access. On downloading a copy of the configuration I can read the other usernames and passwords associated with you, perhaps the one for your ISP. If I can't do that, maybe I can make your device connect to something I own so it gives up its (your) secrets that way.

It's an easy fix. Unbox the device, power it on, change the password, configure. The whole process probably takes less than five minutes yet it's so often forgotten.
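If you're setting up a batch of devices, a check along these lines can stop a default credential slipping through. This is a minimal sketch: `KNOWN_DEFAULTS` holds only the three pairs mentioned above as a hypothetical sample (real published lists are far longer), and `is_default_credential` is an illustrative helper, not part of any vendor's tooling.

```python
# Hypothetical sample of published vendor defaults; real lists are far longer.
KNOWN_DEFAULTS = {
    ("admin", "admin"),
    ("admin", ""),  # admin/<blank>
    ("cisco", "cisco"),
}


def is_default_credential(username: str, password: str) -> bool:
    """Return True if the username/password pair appears in KNOWN_DEFAULTS.

    Usernames are compared case-insensitively (a cautious assumption);
    passwords are compared exactly.
    """
    return (username.lower(), password) in KNOWN_DEFAULTS


# Example: flag a device that still uses a default before setup is signed off.
for user, pw in [("admin", "admin"), ("admin", "a-long-unique-passphrase")]:
    status = "still default, change it" if is_default_credential(user, pw) else "ok"
    print(f"{user}: {status}")
```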

Vendors could help enormously by setting a random password on each device, and some are moving to this, but widespread adoption is a long way off.
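Generating a unique password per device isn't technically hard. Here's a minimal sketch using Python's `secrets` module; the alphabet (with look-alike characters removed so a printed label is easy to type) and the 12-character minimum are my own assumptions, not any vendor's actual scheme, and `generate_device_password` is a hypothetical helper.

```python
import secrets
import string

# Assumption: drop visually ambiguous characters so a password printed on a
# device label can be typed accurately.
ALPHABET = "".join(
    c for c in string.ascii_letters + string.digits if c not in "0O1lI"
)


def generate_device_password(length: int = 16) -> str:
    """Return a cryptographically random password for one device."""
    if length < 12:  # hypothetical minimum, adjust to taste
        raise ValueError("length should be at least 12 characters")
    # secrets.choice uses the OS's secure random source, unlike random.choice.
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(generate_device_password())
```

Using `secrets` rather than `random` matters here: the `random` module is designed for simulations, not for anything an attacker might try to predict.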

Systems aren't safe just because they're made by a big vendor

I've met managers who believe in software being "reassuringly expensive", that is to say any open source or free application clearly can't fit the bill as it's going to be rubbish, insecure or likely both. This is obviously hogwash and every application and device should be evaluated properly to determine its suitability.

The real point here, though, is that just because a vendor has money, or many developers, that doesn't mean the system will be secure. There may well be people dedicated to getting security right, but that doesn't mean their security will be flawless.

Take Microsoft Active Directory's Group Policy Preferences (GPP) as an example. GPP was introduced with Windows Server 2008 as another way to pass settings to your users, and it is incredibly powerful: it removed much of the need for scripting and gave administrators a handy graphical tool for managing settings.

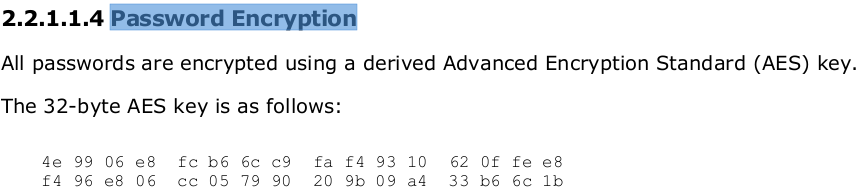

Unfortunately, saving passwords within your GPP configuration will lead to your downfall. The passwords are encrypted, but with a key that Microsoft has published on its website. Because GPP configuration lives in SYSVOL, which any authenticated domain user can read, it's easy for anyone on the domain to get the password back out. Fortunately the user interface does now warn you about this risk when you configure the setting (from memory it didn't use to).
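If you want to check whether any of these are lurking in your own domain, a minimal sketch like the one below walks a copy of the SYSVOL Policies folder and reports every XML element carrying a non-empty `cpassword` attribute, which is where GPP keeps the encrypted password. It only reports locations and makes no attempt at decryption; `find_gpp_passwords` is a hypothetical helper, and it assumes you have read access to SYSVOL.

```python
import os
import xml.etree.ElementTree as ET


def find_gpp_passwords(root_dir):
    """Walk root_dir and yield (path, tag) for each XML element that has a
    non-empty cpassword attribute, i.e. a password saved in a GPP setting."""
    for dirpath, _dirnames, filenames in os.walk(root_dir):
        for name in filenames:
            if not name.lower().endswith(".xml"):
                continue
            path = os.path.join(dirpath, name)
            try:
                tree = ET.parse(path)
            except (ET.ParseError, OSError):
                continue  # unreadable or malformed file: skip it
            for element in tree.iter():
                if element.get("cpassword"):
                    yield path, element.tag
```

You'd call it with something like `find_gpp_passwords(r"\\example.local\SYSVOL\example.local\Policies")`, where `example.local` is a placeholder for your own domain name. Any hit should be removed from the policy, and the password it contained treated as known and changed.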

People don't always adhere to password policies

This one came from an audit I did of an organisation's Active Directory passwords. A worrying statistic: in the first thirteen seconds of cracking passwords I had 139 credentials. It's no surprise that I managed to crack some, but how quickly I got that far was a shock. The organisation's policy specified the usual password constraints (minimum length, upper and lower case characters, numbers, punctuation etc.) but also specified that, for users with admin accounts, the admin account not only had to have a longer password than user accounts, it also had to be different from that person's everyday user account password. In the same thirteen seconds that I obtained all those credentials, I could also see that a member of the IT team hadn't adhered to this part of the policy.

The same policy also stipulated that default credentials shouldn't be used - sadly that wasn't followed either.
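If an audit gives you cracked plaintexts, a policy like the one described above is easy to check mechanically. A minimal sketch: the length thresholds are hypothetical placeholders for whatever your own policy says, and `audit_person` is an illustrative helper taking one person's everyday and admin passwords. Realistically you'll only have plaintexts for the accounts you managed to crack, so treat it as a way of reporting on those rather than a complete audit.

```python
import string

# Hypothetical thresholds: substitute the figures from your own policy.
USER_MIN_LENGTH = 10
ADMIN_MIN_LENGTH = 15


def complexity_problems(password: str, min_length: int) -> list:
    """List the usual complexity rules that this password breaks."""
    problems = []
    if len(password) < min_length:
        problems.append(f"shorter than {min_length} characters")
    if not any(c.islower() for c in password):
        problems.append("no lower-case letter")
    if not any(c.isupper() for c in password):
        problems.append("no upper-case letter")
    if not any(c.isdigit() for c in password):
        problems.append("no number")
    if not any(c in string.punctuation for c in password):
        problems.append("no punctuation")
    return problems


def audit_person(user_password: str, admin_password: str) -> list:
    """Check one person's everyday and admin passwords against the policy."""
    problems = [f"user: {p}" for p in complexity_problems(user_password, USER_MIN_LENGTH)]
    problems += [f"admin: {p}" for p in complexity_problems(admin_password, ADMIN_MIN_LENGTH)]
    # The admin-specific rules: longer than, and different from, the user password.
    if len(admin_password) <= len(user_password):
        problems.append("admin password is not longer than the user password")
    if admin_password == user_password:
        problems.append("admin password is the same as the user password")
    return problems
```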

You can't know everything

As I've remarked before, there's bound to be a server, client machine or device on the network that you either don't know about or have forgotten. IT colleagues come and go, other people provide equipment or plug cables in to be "helpful" and unless you're auditing every single device and connection every second of every day it's likely your test will turn up something.

The same applies to software projects. You may have written most of the code, but can you honestly say you remember every detail? What about the frameworks and plugins you're using? Unless those are all kept up to date, a test might find no problems with your code but many with third-party code.
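If you keep a list of the third-party components a project uses, even a crude comparison against the minimum versions you know to be patched will flag the obvious gaps. This sketch assumes plain numeric dotted versions (`2.4.0`, not `2.4.0-rc1`), and the component names are hypothetical; a real project would lean on its package manager's own audit tooling and a proper version parser.

```python
def parse_version(text: str) -> tuple:
    """Turn a plain dotted version such as '2.4.0' into (2, 4, 0).

    Comparing tuples of integers avoids the text-comparison trap where
    '1.10.0' would sort before '1.9.2'.
    """
    return tuple(int(part) for part in text.split("."))


def outdated(installed: dict, minimum_safe: dict) -> list:
    """Return component names whose installed version is below the minimum
    safe version. Components without a known minimum are ignored."""
    return sorted(
        name
        for name, version in installed.items()
        if name in minimum_safe
        and parse_version(version) < parse_version(minimum_safe[name])
    )


# Hypothetical component names and versions, for illustration only.
installed = {"examplelib": "2.3.9", "otherlib": "1.10.0", "untracked": "0.1"}
minimum_safe = {"examplelib": "2.4.0", "otherlib": "1.9.2"}
print(outdated(installed, minimum_safe))
```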

I once found an office that I didn't know ICT had (we'd vacated it many years ago as far as I knew). Admittedly it wasn't a penetration test that discovered it, but it does demonstrate the point. The office contained several spare (computer) mice and power leads along with a connection to the server room's main KVM. If someone else had got in there they could have played havoc with our environment.
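One way to shrink the unknowns is to compare what a scan actually finds on the network with what your asset register says should be there. A minimal sketch, assuming you can export both as lists of IP addresses; `unknown_hosts` is a hypothetical helper and the addresses are made up.

```python
import ipaddress


def unknown_hosts(discovered, inventory):
    """Return addresses seen on the network but missing from the inventory.

    Both arguments are iterables of IP address strings. Results are sorted
    numerically (IPv4 before IPv6) rather than as text, so 10.0.0.9 comes
    before 10.0.0.10.
    """
    known = {ipaddress.ip_address(a) for a in inventory}
    found = {ipaddress.ip_address(a) for a in discovered}
    extra = sorted(found - known, key=lambda a: (a.version, int(a)))
    return [str(a) for a in extra]


scan_results = ["10.0.0.1", "10.0.0.9", "10.0.0.10"]
asset_register = ["10.0.0.1"]
print(unknown_hosts(scan_results, asset_register))
```

Parsing with `ipaddress` rather than comparing raw strings also means malformed entries in either list raise an error instead of silently never matching.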

You probably didn't sanitise all your code

When developing software it's really important to never trust what the user gives you. It doesn't matter if you're writing a web application, banking app or calculator - if you process data from a user then you could equally be processing data from a malicious actor.
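For data that ends up in a database query, the standard defence is to pass user input as a query parameter rather than pasting it into the SQL string. A minimal sketch using Python's built-in `sqlite3` module and a throwaway in-memory database; the `users` table and `find_user` helper are hypothetical.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str) -> list:
    """Look up users by name using a parameterised query."""
    # The ? placeholder hands `username` to SQLite as data, never as SQL,
    # so input such as "x' OR '1'='1" cannot change the query's meaning.
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
print(find_user(conn, "alice"))
print(find_user(conn, "x' OR '1'='1"))
```

Had the query been built with string formatting instead, the second call would have returned every row in the table.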

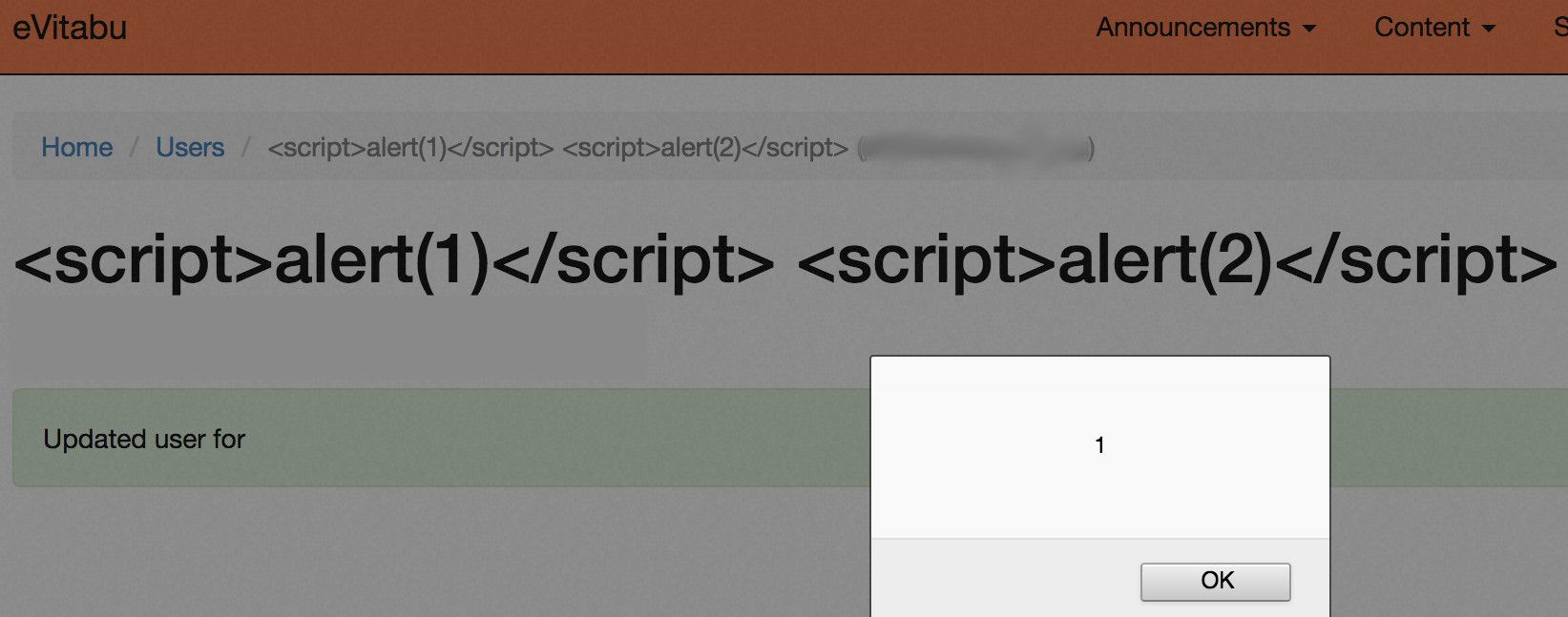

Injection is one of the biggest problems OWASP, the Open Web Application Security Project, has identified for website authors, and has been for years. Its 2017 "Top Ten" lists injection as the top flaw, as did the 2013 edition, so it's clear this vulnerability isn't going away any time soon. I'm aware of the OWASP Top Ten, I'm trained in web application penetration testing and I do my best to make sure my code isn't exploitable. Given all that, I didn't expect to be sent this by @SiberSecurity when they were testing eVitabu:

(For those unfamiliar with web application testing and vulnerabilities, that pop-up with the number 1 shouldn't be there.)

I'd sanitised fields all over the place but I'd missed one, and they successfully exploited it during their test. The fix took less than five minutes but, left unresolved, the flaw could have caused problems when the system went live.
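A pop-up like that typically comes from a proof-of-concept payload along the lines of `<script>alert(1)</script>`. I'm assuming that shape purely for illustration; it isn't the payload from the actual test. One standard defence is to escape user input when it's written into a page. Here's a minimal sketch using Python's `html.escape`; `render_comment` is a hypothetical helper. This kind of escaping suits HTML element content and quoted attributes, not values placed inside JavaScript or URLs, which need their own encoding.

```python
import html


def render_comment(comment: str) -> str:
    """Wrap user-supplied text in a paragraph, escaping it for HTML."""
    # html.escape converts &, < and >, plus " and ' when quote=True, into
    # entities, so the browser displays the text instead of running it.
    return "<p>" + html.escape(comment, quote=True) + "</p>"


print(render_comment("<script>alert(1)</script>"))
```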

Don't make the mistake of thinking all your code is perfect; it never is!

Banks don't necessarily have good security

I worked for a bank briefly, and during that time I was the contact person for an on-site penetration test. After two days the test was complete and it revealed some harsh truths: the bank had weaker security than the local government office I'd worked at before. I discussed the report with my boss and told him the results were, frankly, embarrassing, especially given I'd fixed the same problems in my previous job over a year earlier.

The vulnerabilities weren't the most complicated to exploit, and the organisation might have been forgiven for not knowing about them had it never had a penetration test before. Frustratingly, I believe it had...

What next?

The important thing is to learn from your penetration test. A good tester will not be laughing at you or the organisation because they managed to gain access - the red team always wins. Take their results, implement fixes and improve your situation.